CIRCA:Interactivity and Touch


Haptic Displays

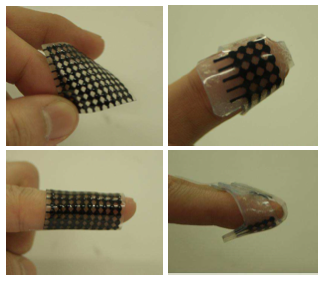

Wearable Haptic Display - Koo, I., Jung, K., Koo, J., Nam, J., Lee, Y., & Choi, H. R. (2006)

This device is a small piece of plastic that can be worn on a finger. Each device is made up of a group of pads that expand when current is run through them. Because each pad is surrounded by a harder plastic material, it bulges outward when it expands. By controlling the current passed through the pads, it is possible to create different haptic patterns such as braille.
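As a rough illustration of how a braille pattern could be turned into pad states, the sketch below maps a letter to a grid of on/off pads. It assumes a hypothetical 3 × 2 pad layout matching a standard six-dot braille cell and simple on/off drive per pad; the paper's actual pad arrangement and drive electronics may differ, and `BRAILLE_DOTS`, `dot_to_cell`, and `pad_pattern` are illustrative names.

```python
# Hypothetical pad layout: 3 rows x 2 columns, one pad per braille dot.
# Standard braille numbering: dots 1-3 run down the left column,
# dots 4-6 run down the right column.
BRAILLE_DOTS = {
    "a": {1},
    "b": {1, 2},
    "c": {1, 4},
    "d": {1, 4, 5},
    "e": {1, 5},
}

def dot_to_cell(dot: int) -> tuple[int, int]:
    """Return the (row, col) position of a braille dot in the 3x2 pad grid."""
    col = 0 if dot <= 3 else 1
    row = (dot - 1) % 3
    return row, col

def pad_pattern(letter: str) -> list[list[bool]]:
    """Return a 3x2 grid where True means 'drive this pad so it bulges'."""
    grid = [[False, False] for _ in range(3)]
    for dot in BRAILLE_DOTS[letter.lower()]:
        row, col = dot_to_cell(dot)
        grid[row][col] = True
    return grid
```

Only the first five letters are included; extending the table to the full alphabet is mechanical.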

The paper continues to describe the physical characteristics of the device and how it is manufactured.

Regarding pros and cons, the paper notes that the device is cheap to produce, physically flexible, and easy to manufacture. The main drawback it mentions is a high power requirement. Furthermore, it is questionable whether the device would work well as a wearable as pictured. Presumably a user would have to brush a fingertip across the surface in order to "read" the display. If the device is worn on the finger, this is not possible; thus, the device must simulate the feel of brushing over bumps by quickly raising and lowering each "pixel" in succession (akin to persistence-of-vision displays).
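The persistence-of-vision idea above can be sketched as a scrolling window: the pattern is stored as a sequence of columns, and each frame shows a display-width slice shifted by one column, so bumps appear to travel under a stationary fingertip. This illustrates the suggestion made on this page, not a technique described in the paper; the column representation and `sweep_frames` are hypothetical, and the frame rate is left open.

```python
from typing import Iterator

Column = tuple[bool, ...]  # pad states in one column, top to bottom

def sweep_frames(columns: list[Column], display_width: int) -> Iterator[list[Column]]:
    """Yield frames that scroll `columns` right-to-left across a fixed-width pad array."""
    height = len(columns[0])
    blank: Column = (False,) * height
    # Blank columns on both sides let the pattern enter at the right edge
    # and fully leave at the left edge.
    padded = [blank] * display_width + list(columns) + [blank] * display_width
    for offset in range(len(padded) - display_width + 1):
        yield padded[offset:offset + display_width]
```

A driver loop would hold each frame for a short, fixed interval before raising and lowering the pads for the next one.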

The paper suggests that the technology could be applied in a variety of situations, such as interfaces for household appliances, virtual reality, and automobile interfaces, but it does not specify how the device would be integrated into each of those applications.

Reference:

Koo, I., Jung, K., Koo, J., Nam, J., Lee, Y., & Choi, H. R. (2006). Wearable tactile display based on soft actuator. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation (ICRA 2006) (pp. 2220–2225).

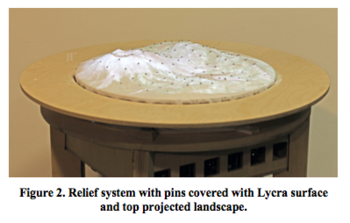

Relief: A Scalable Actuated Shape Display - Leithinger, D., & Ishii, H.

Relief is a tabletop display that changes shape, like a dynamic topographical diorama. "The tabletop surface is actuated by an array of 120 motorized pins, which are controlled with a low-cost, scalable platform built upon open-source hardware and software tools. Each pin can be addressed individually and senses user input like pulling and pushing." Images can be projected onto the canvas, a cloth stretched over the pins. In the image to the right, a mountain landscape is displayed.
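One way to picture how a shape display like Relief could be driven is to treat it as a grid of position-controlled pins: a heightmap (for example, terrain elevation) sets each pin's target, and a pin whose measured position drifts away from its target indicates that a user is pushing or pulling it. This is a minimal sketch under assumptions: the 10 × 12 layout, the travel distance, the threshold, and all names are hypothetical, and the paper's actual sensing and control loop may work differently.

```python
ROWS, COLS = 10, 12      # hypothetical layout: 10 x 12 = 120 pins
MAX_TRAVEL_MM = 100.0    # hypothetical pin travel

Grid = list[list[float]]

def flat_map(level: float) -> Grid:
    """A uniform heightmap (values 0..1) covering every pin."""
    return [[level] * COLS for _ in range(ROWS)]

def heightmap_to_targets(heights: Grid) -> Grid:
    """Clamp heights to [0, 1] and scale them to pin target positions in mm."""
    return [[max(0.0, min(1.0, h)) * MAX_TRAVEL_MM for h in row] for row in heights]

def detect_input(targets: Grid, measured: Grid, threshold_mm: float = 5.0) -> dict[tuple[int, int], str]:
    """Report pins the user has pushed down or pulled up, i.e. whose measured
    position differs from the commanded target by more than threshold_mm."""
    events = {}
    for r, (t_row, m_row) in enumerate(zip(targets, measured)):
        for c, (t, m) in enumerate(zip(t_row, m_row)):
            if m < t - threshold_mm:
                events[(r, c)] = "push"
            elif m > t + threshold_mm:
                events[(r, c)] = "pull"
    return events
```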

The device leverages open-source hardware in the form of the Arduino: 32 Arduinos, with accompanying Adafruit shields, control the motors that drive the pins.
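With 32 controller boards and 120 pins, each board handles roughly four pins. The sketch below shows one hypothetical addressing scheme that assigns pins to boards in blocks of four; the real wiring in Relief may be allocated differently, and `pin_address` is an illustrative name.

```python
NUM_PINS = 120
NUM_BOARDS = 32
PINS_PER_BOARD = 4  # hypothetical: 32 x 4 = 128 motor channels for 120 pins

def pin_address(pin: int) -> tuple[int, int]:
    """Map a pin index (0..119) to (board index, motor channel on that board)."""
    if not 0 <= pin < NUM_PINS:
        raise ValueError(f"pin {pin} is outside 0..{NUM_PINS - 1}")
    board, channel = divmod(pin, PINS_PER_BOARD)
    assert board < NUM_BOARDS  # 120 pins always fit within the 32 boards
    return board, channel
```

Under this scheme pins 116 to 119 land on board 29, leaving the last two boards with spare channels; a scheme that spreads pins evenly would instead give some boards three pins and others four.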

The researchers suggest geospatial exploration as one possible application of such a device. No user study had been completed when the proceedings were published; nevertheless, the researchers consider the system a first step toward a low-cost shape display.

Reference:

Leithinger, D., & Ishii, H. (2010). Relief: A Scalable Actuated Shape Display. In TEI '10: Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction.

Controllers

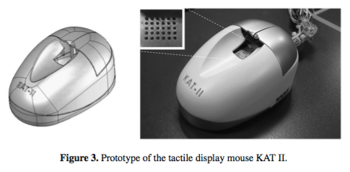

KAT II: Tactile Display Mouse - Gi-Hun Yang and Dong-Soo Kwon

This mouse is an attempt at creating a device that facilitates experiments with temperature and vibrational/textural feedback. The mouse has two features that set it apart from commodity computer mice. First, it provides thermal information to the user by heating up or cooling down. Second, it has a haptic strip embedded in its middle that the user can feel with their fingers. By combining the two, the mouse is able to emulate different surfaces. For example, glass could be presented as cold and smooth, whereas sand could be presented as rough and warm.

The paper claims that users were able to successfully differentiate materials using thermal properties alone: in the experiment, participants picked a material from a list based only on thermal information. A second study found that thermal properties affected the perception of vibro-tactile feedback. Based on these results, the authors conclude that temperature does indeed affect how a user perceives texture.

The paper does not claim that the mouse can accurately reproduce different textures through temperature and vibro-tactile feedback; rather, the mouse is intended as a tool for further experimentation in creating realistic simulations of materials through texture and temperature.

Reference:

Yang, G.-H., & Kwon, D.-S. (2008). KAT II: Tactile Display Mouse for Providing Tactile and Thermal Feedback. Advanced Robotics, 22(8), 851–865.

Z-Stretch - Chang, A., & Ishii, H.

This controller is a piece of fabric that generates different sounds depending on how it is stretched. This novel interface explores ways in which one might treat music as a sheet of cloth, and the paper shows that a simple technology coupled with appropriate interaction can provide an expressive musical interface.

To make the controller, stretch sensors were stitched into the fabric along each edge. When the fabric was pulled, scrunched, or otherwise manipulated, the resistance of each sensor changed, and this change in resistance was mapped to a particular characteristic of two sound loops. The sides were mapped to the volume of a single sound loop, the top to pitch, and the bottom to speed.
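The mapping described above can be sketched as: normalize each stretch sensor's resistance against a calibration range, then assign the sides to volume, the top to pitch, and the bottom to playback speed. The calibration values, the output ranges (0 to 12 semitones, 0.5× to 2× speed), and the names `Calibration` and `map_stretch` are assumptions for illustration; the paper's actual ranges and scaling are not given here.

```python
from dataclasses import dataclass

@dataclass
class Calibration:
    rest_ohms: float       # sensor resistance with the fabric relaxed (hypothetical)
    stretched_ohms: float  # resistance at maximum comfortable stretch (hypothetical)

    def normalize(self, ohms: float) -> float:
        """Map a raw resistance to 0.0 (relaxed) .. 1.0 (fully stretched), clamped."""
        x = (ohms - self.rest_ohms) / (self.stretched_ohms - self.rest_ohms)
        return max(0.0, min(1.0, x))

def map_stretch(cal: Calibration, left: float, right: float, top: float, bottom: float) -> dict:
    """Sides -> volume, top -> pitch, bottom -> speed, as described on this page."""
    volume = (cal.normalize(left) + cal.normalize(right)) / 2  # 0..1
    pitch_semitones = round(cal.normalize(top) * 12)           # assumed range: 0..+12
    speed = 0.5 + 1.5 * cal.normalize(bottom)                  # assumed range: 0.5x..2.0x
    return {"volume": volume, "pitch_semitones": pitch_semitones, "speed": speed}
```

Because stretching one edge inevitably tugs on the others, these parameters tend to move together, which is the mechanical coupling the researchers observed.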

The researchers note that different mappings are possible. They also note an interesting mechanical constraint: manipulating one edge always affects the others to some degree, since no edge can really be isolated from the rest. Concerns with the device include wear and tear on the stitching and the material.

The paper ends with the idea that we should also experiment with new interactions on existing interfaces rather than only creating new interfaces. The authors also conclude that even such a simple interface offers a myriad of possible mappings and interactions.

Reference:

Chang, A., & Ishii, H. (2007). Zstretch: A Stretchy Fabric Music Controller. In Proceedings of the 2007 Conference on New Interfaces for Musical Expression (NIME07). Presented at the 2007 Conference on New Interfaces for Musical Expression, New York, NY, USA.

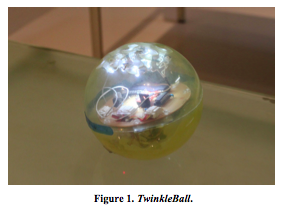

TwinkleBall - Yamaguchi, T., Kobayashi, T., Ariga, A., & Hashimoto, S.

The TwinkleBall is a "wireless musical interface driven by grasping forces and human motion." Embedded inside a clear, squishy plastic ball are a number of sensors that detect grasping force and motion. Squeezing the ball changes the note being played, and the volume is calculated from the movement or orientation of the device.

The grasping force exerted on the system is detected in an unconventional manner. LEDs are mounted on the inside of the shell, and a photoresistor is mounted in the center of the ball. As the user squeezes the outside, the LEDs move closer to the photoresistor, which then detects brighter light.

Because the TwinkleBall's shell is transparent, ambient lighting also reaches the photoresistor and affects the readings.
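To illustrate how squeeze could be turned into notes while limiting the ambient-light problem, the sketch below uses a common technique that may or may not match the TwinkleBall's implementation: take one photoresistor reading with the internal LEDs on and one with them briefly off, and subtract the two so that only the LED light remains. The scale, the calibration values, the assumption that readings rise with brightness, and the function names are all hypothetical.

```python
# Hypothetical scale: C major as MIDI note numbers (60 = middle C).
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]

def squeeze_level(lit: float, dark: float, rest_signal: float, full_signal: float) -> float:
    """Estimate squeeze strength in 0..1 from two photoresistor readings
    (readings are assumed to rise with brightness).

    lit:  reading with the internal LEDs on (LED light + ambient light)
    dark: reading with the LEDs briefly off (ambient light only)
    rest_signal / full_signal: LED-only signal when untouched / fully squeezed,
    taken during a hypothetical calibration step.
    """
    led_signal = lit - dark  # subtracting the dark reading cancels ambient light
    level = (led_signal - rest_signal) / (full_signal - rest_signal)
    return max(0.0, min(1.0, level))

def note_for(level: float) -> int:
    """Harder squeeze -> brighter LED light -> higher note in the scale."""
    index = min(int(level * len(SCALE)), len(SCALE) - 1)
    return SCALE[index]
```

Photoresistors respond relatively slowly, so in practice the LEDs could only be toggled at a modest rate with this approach.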

The researchers note that real performances would also require control over tempo, which they had not yet mapped to the system.

Reference:

Yamaguchi, T., Kobayashi, T., Ariga, A., & Hashimoto, S. (2010). TwinkleBall: A Wireless Musical Interface for Embodied Sound Media. Proceedings of the 2010 Conference on New Interfaces for Musical Expression. Presented at the 2010 Conference on New Interfaces for Musical Expression, Sydney, Australia.

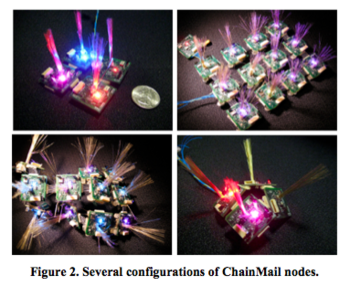

ChainMail - Mistree, B. F. T., & Paradiso, J. A.

ChainMail is a modular system of small sensor-laden circuit boards. The boards are connected into a mesh that can be laid on top of an existing surface to add sensing capabilities to it.

Each node is a 1" × 1" square connected to other nodes by flexible interconnects, and includes an "embedded processor together with a suite of thirteen sensors, providing dense, multimodal capture of proximate and contact phenomena."

The sensors include: "three pressure sensors to determine vector force, a sound sensor, a light sensor, a temperature sensor, a bend sensor, and a whisker sensor capable of monitoring airflow or proximity."

The paper then evaluates the system to see which sensors perform best. The authors found that the temperature and bend sensors did not perform as well as they had hoped. Furthermore, the wires connecting the nodes were not robust enough, so another solution, such as conductive thread, springs, or flexible circuit board material, will have to be found.

As for applications, the researchers envision the system as a scalable lining that adds rich contact and non-contact sensing to objects or surfaces "for applications ranging from robotics to telepresence." The reconfigurable sensor network also serves as a platform for exploring "networking, communications, scalability and control questions in large sensor grid deployments."

Reference:

Mistree, B. F. T., & Paradiso, J. A. (2010). ChainMail – A Configurable Multimodal Lining to Enable Sensate Surfaces and Interactive Objects. In TEI '10: Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction.

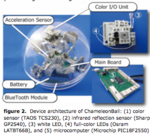

ChameleonBall - Tsukada, K., & Oki, M.

The ChameleonBall is a controller developed to let users "interact with colors in the real world." While the paper is short and does not provide much content, the device itself is interesting. In short, it combines color sensors for input with LEDs for output: the device detects the colors of its surroundings and then reproduces them using the LEDs.

The applications developed by the researchers let users pick up, mix, and output colors around a space with the ball. For example, a user could pick up a few different colors around the physical space, shake the ball to mix them, and then toss the ball up and catch it to change the room lighting to the mixed color.
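The pick-up, mix, and output interaction can be sketched as a small state machine. Mixing is modelled here as a simple average of RGB values, which is an assumption; the paper, as summarized here, does not specify how colors are combined. Detecting shakes and throws (for example with an accelerometer) is outside the sketch, so those gestures are represented as method calls, and `ColorBall` and its methods are hypothetical names.

```python
from typing import Callable

RGB = tuple[int, int, int]

def mix_colors(colors: list[RGB]) -> RGB:
    """Mix colors by averaging each channel and rounding to the nearest integer."""
    if not colors:
        raise ValueError("no colors have been picked up")
    n = len(colors)
    r, g, b = (round(sum(c[i] for c in colors) / n) for i in range(3))
    return (r, g, b)

class ColorBall:
    """Hypothetical model of the pick-up / shake / throw interaction."""

    def __init__(self) -> None:
        self.picked: list[RGB] = []
        self.led_color: RGB = (0, 0, 0)

    def pick_up(self, sensed: RGB) -> None:
        """Store a color read by the color sensor and echo it on the LEDs."""
        self.picked.append(sensed)
        self.led_color = sensed

    def shake(self) -> None:
        """Mix everything picked up so far into a single color."""
        self.led_color = mix_colors(self.picked)
        self.picked = [self.led_color]

    def throw_and_catch(self, set_room_light: Callable[[RGB], None]) -> None:
        """Send the current color to a room-lighting callback."""
        set_room_light(self.led_color)
```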

Reference:

Tsukada, K., & Oki, M. (2010). Chameleonball. In TEI '10: Proceedings of the fourth international conference on Tangible, embedded, and embodied interaction.

Environments

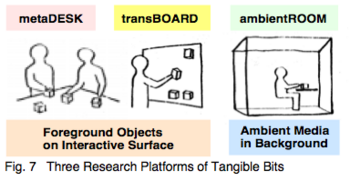

Tangible Bits - Ishii, H., & Ullmer, B.

This project is a collection of individual projects related to interactivity, ergonomics, haptics, and data visualization. The collection takes the form of a room or office designed "to bridge the gaps between both cyberspace and the physical environment, as well as the foreground and background of human activities". Tangible Bits is made up of three categories of components: interactive surfaces, intelligent objects (tangible computing), and ambient information. The three prototype components are the metaDESK, transBOARD, and ambientROOM.

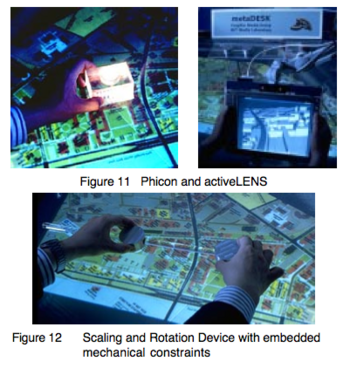

metaDESK

The metaDESK attempts to "push the GUI back into the real world." The metaDESK is a collection of different physical objects and a rear-projection screen. The objects include:

- Phicons, or physical icons, are used to manipulate and control information displayed on the metaDESK.

- activeLENS, an LCD display mounted on a flexible arm used to provide different views of the information.

- passiveLENS, a transparent plexiglass device that changes the view on the metaDESK. The distinction between this and the activeLENS is not clear to me.

An example application provided in the paper is the Tangible Geospace, a mapping application. A detailed explanation can be found in the paper; however, I will provide a short summary here.

Phicons are used to anchor the map. For example, if you place the phicon for building A onto the metaDESK, the map is displayed oriented to match the placement of phicon A. If phicon B is then placed onto the map, the map is re-oriented so that both building A and building B are drawn on top of their corresponding phicons. In this application, the activeLENS provides a 3D view of the 2D map presented on the metaDESK. The passiveLENS is used to overlay different versions of the map (e.g., satellite, topographical, or drawn) in specific places.
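Geometrically, re-orienting the map so that both buildings sit under their phicons amounts to finding a 2D similarity transform (rotation, uniform scale, and translation) from two point correspondences. The sketch below is a reconstruction of that geometry using complex numbers, not code from the paper; `fit_two_points` and the coordinate conventions are assumptions.

```python
import cmath

def fit_two_points(map_a, map_b, table_a, table_b):
    """Fit z -> s*z + t so that map point A lands on phicon A and map point B
    on phicon B. Returns (scale, rotation in radians, transform function)."""
    a, b = complex(*map_a), complex(*map_b)
    p, q = complex(*table_a), complex(*table_b)
    if a == b:
        raise ValueError("the two buildings must be at different map positions")
    s = (q - p) / (b - a)  # complex factor encoding rotation and uniform scale
    t = p - s * a          # translation that pins building A under phicon A

    def transform(point):
        z = s * complex(*point) + t
        return (z.real, z.imag)

    return abs(s), cmath.phase(s), transform
```

With only one phicon placed, just the translation (plus the phicon's own orientation) is determined; the second phicon is what fixes scale and rotation.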

transBOARD

The transBOARD is a networked whiteboard meant to "explore the concept of interactive surfaces which absorb information from the physical world, transforming this data into bits and distributing it into cyberspace".

The transBOARD accomplishes this by using phicons. The phicons for this system take the shape of magnetic cards and allow drawings on the whiteboard to be saved onto the card and reopened later or transmitted over the internet to remote locations.

The paper claims that the difference between the transBOARD and metaDESK is that the transBOARD only transforms physical data into digital bits (one-way), whereas the metaDESK can also transform digital bits into physical data (two-way). I would challenge this claim, since the magnetic cards can also be used to display stored drawing data (kept on a server) on a transBOARD or computer.

ambientROOM

"The ambientROOM complements the graphically-intensive, cognitively-foreground interactions of the metaDESK by using ambient media – ambient light, shadow, sound, airflow, water flow – as a means for communicating information at the periphery of human perception."

The paper suggests that phicons representing sources of information, such as web hits, may be placed near objects in the room, such as a speaker, which then presents the phicon's data to the user in an ambient fashion. The example given in the paper involves a car phicon tracking web hits to a car sales website. When placed beside a speaker, this car phicon causes the speaker to play a raindrop sound for each hit on the website; as traffic increases, the sound scales from individual drops to a downpour.
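A minimal sketch of the hit-to-rain mapping: low traffic plays one drop per hit, and beyond a cap a continuous rain loop fades in, reaching full volume as a downpour. The thresholds, the logarithmic fade, and the name `rain_for` are hypothetical choices; the paper describes the effect, not a formula.

```python
import math

DROP_CAP = 20        # hypothetical: above this many hits/s, individual drops blur together
DOWNPOUR_RATE = 200  # hypothetical: hits/s at which the rain loop reaches full volume

def rain_for(hits_per_second: float) -> tuple[int, float]:
    """Return (individual drops to play this second, gain 0..1 of a continuous rain loop)."""
    drops = min(int(hits_per_second), DROP_CAP)
    if hits_per_second <= DROP_CAP:
        return drops, 0.0
    # Fade the downpour loop in logarithmically between DROP_CAP and DOWNPOUR_RATE,
    # since web traffic can vary over orders of magnitude.
    gain = math.log(hits_per_second / DROP_CAP) / math.log(DOWNPOUR_RATE / DROP_CAP)
    return drops, min(1.0, gain)
```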

Reference:

Ishii, H., & Ullmer, B. (1997). Tangible bits: towards seamless interfaces between people, bits and atoms. In Proceedings of the SIGCHI conference on Human factors in computing systems, CHI '97 (pp. 234–241). New York, NY, USA: ACM.